Performance won’t improve unless we can go beyond measurement. Measuring results is only a small part of the challenge. The bigger challenge is making sense of these results and using them to address problems and develop strategies to improve the situation.

You have designed and implemented an effective learning assessment. You have navigated all the hurdles of administering it, you have gathered the results, and a team of statisticians has spent months analysing the information. You even have a report, and you have discovered some major learning disparities—perhaps along gender lines, or by region.

Now what? Can anyone make sense of the results? Is anyone doing anything to go beyond the analyses?

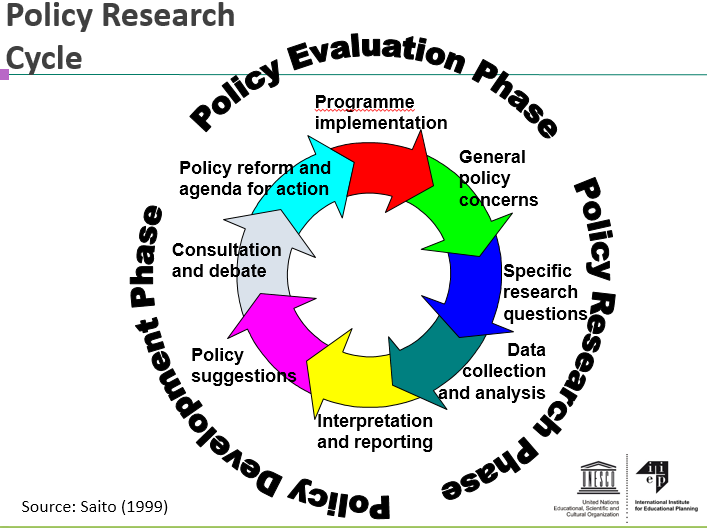

In theories of education policy-making, data collection and analysis usually appear as one smoothly integrated step in an ongoing process (see Figure 1). But a recent informal IIEP survey shows that reality often doesn’t work that way.

Some 300 participants[1] in the 2016 IIEP MOOC on Learning Assessments responded to a survey that was provided as an optional activity for the course.[2] They answered a series of questions on whether they were informed about learning assessment data and whether they had participated in different aspects of assessment activities.

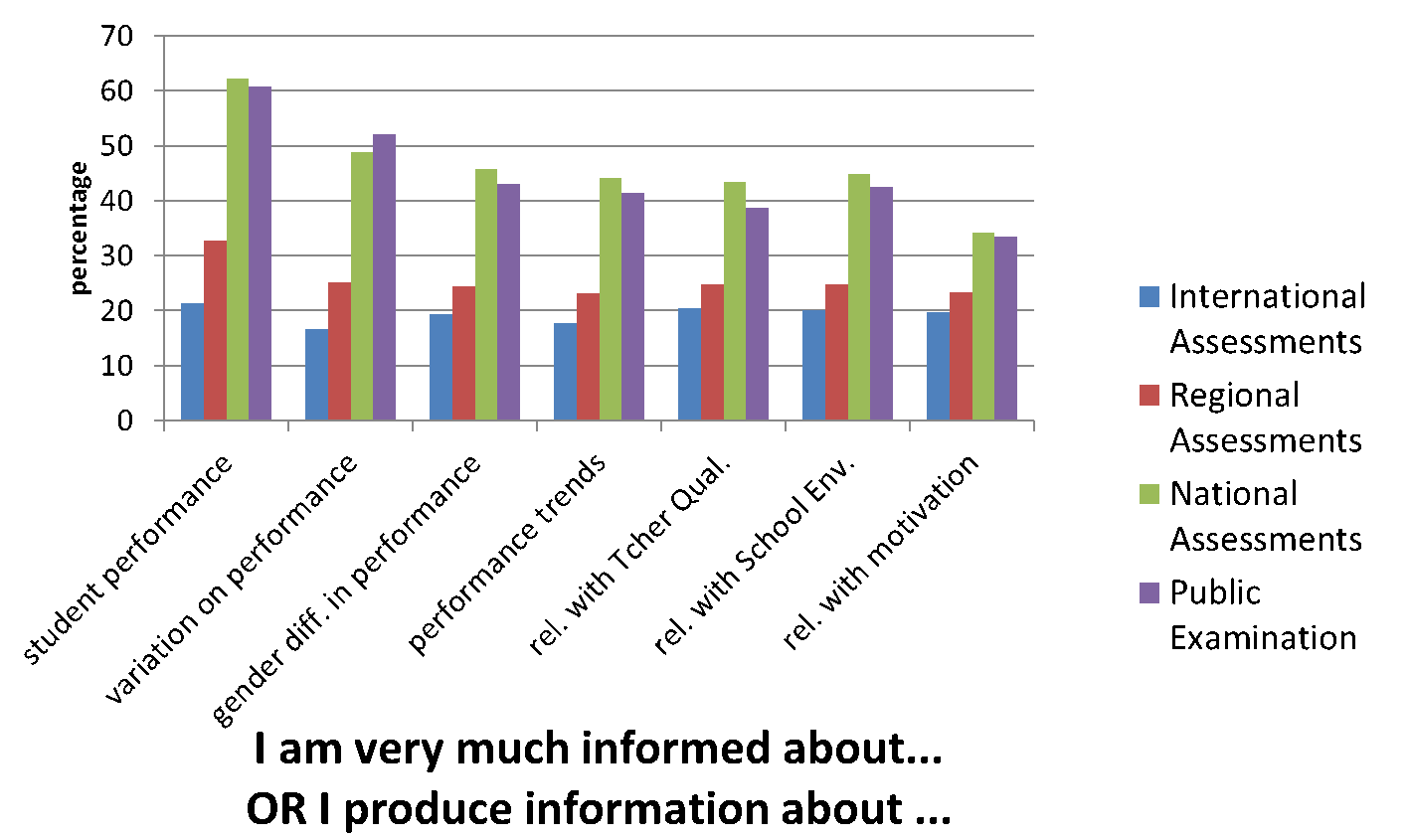

This was a population that already had a high interest in learning assessment data; after all, they had participated actively in an online course on the issue. One might expect such a group to be well informed, but the survey data revealed a different picture. Only about six in ten participants felt they were very informed about, and/or had produced information on, the overall level of student performance: 62% with regard to national assessments and 61% with regard to public examinations.

And the numbers dropped sharply when participants were asked about more detailed aspects of interpreting the assessment data (see Figure 2). Focusing on their familiarity with national assessment data, for example, fewer than 49% were aware of the variation in student performance and only 46% were aware of gender differences. Just 44% knew of the trends in student performance over time, and only a minority felt they had a grasp of the relationship between student performance and the school environment (43%), teacher quality (45%), and student motivation (34%). Familiarity with public examinations data was in general slightly lower than these figures, and for international and regional assessments the percentages were roughly half as high.

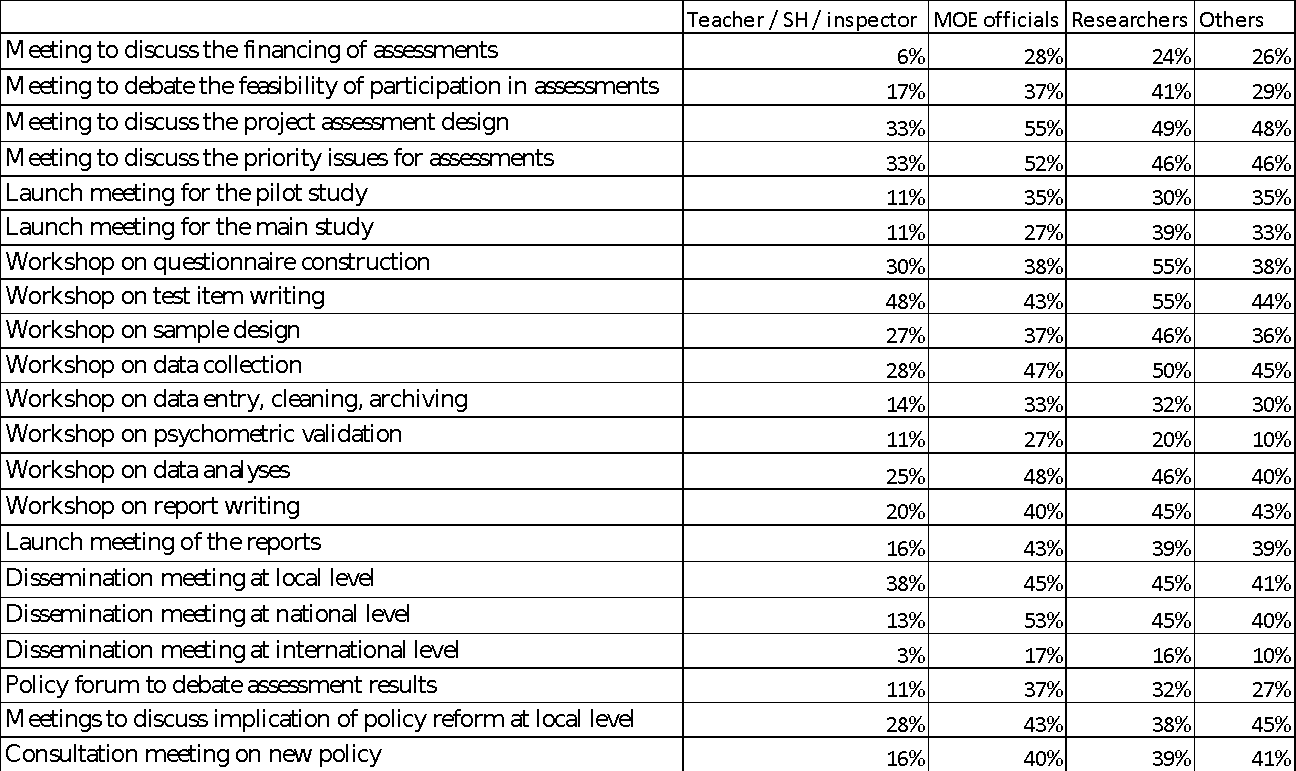

Among the many phases of assessment design, implementation, and reporting included in the survey, the percentages were similarly low for participation in meetings where assessment results were debated in relation to local or national policy reform. Only around 40% of Ministry officials and researchers had participated in such meetings, and fewer than 30% of local education stakeholders such as teachers, school heads, and inspectors had done so (see Table 1).

In other words, even those who are very interested in assessment data are not highly aware of its implications for policy and practice in their own contexts.

In 1979, Carol Weiss wrote an influential article about the many meanings of research utilization. Her model still holds lessons for us today, as we think about the question of what to do with assessment data. Of all the possible ways research may impact public policy, Weiss argued that the most frequent is through an indirect process of “enlightenment” where the concepts and theoretical models of relationships and change gradually “permeate the policy-making process”, eventually “coming to shape the way in which people think about social issues”.[3]

For assessment data to accurately shape decision-makers' ideas about education quality, decision-makers need access to the information: not just once but multiple times, not only after the assessment but also before and during the design phase, and with multiple opportunities to think through the data and process its implications.

In the IIEP survey, the participants were also asked which sources of information they use to learn about assessment results. Many different sources are possible, such as official documents, mass media reports, conference presentations, training programmes, informal conversations, and direct observation. Official documents were the most used source among all stakeholders, even teachers. The mass media and informal conversations were the next most used sources among all stakeholders. Surprisingly, policy briefs, which are meant to highlight key findings and outline potential pathways for policy makers, were not as widely used, even by Ministry officials.

The results from this informal survey suggest that education stakeholders can help ensure that learning assessment data shapes public decision-making by building synergies among information producers and users. Researchers need to play the role of information brokers, not only producing information but also thinking about how to share it through multiple channels.

When assessment data is used to sensitize and inform public opinion, it provides orientation for public reflection on the state of the education system and its future. Those moments of public reflection, in turn, provide space for re-considering the ideals at the foundation of the system and re-evaluating the strategies for achieving those ideals. Through a gradual process of percolation, as Carol Weiss argued, new ways of thinking can come to the fore along with a new level of motivation for action.

Notes

[1] Participants included teachers, school heads, inspectors, researchers, Ministry of Education officials, and others. Respondents were 54% female, 46% male.

[2] The survey was voluntary and did not count towards the course grade.

[3] Weiss, C. 1979. The many meanings of research utilization. Public Administration Review, Vol. 39, No. 5, p. 429.

Based on a presentation made at the Educaid.be Annual Conference 2016.